FATAL: too large in a GitLab pipeline 😎

I upgraded some Node versions in my GitLab CI pipeline. After the upgrade, I ran the pipeline and it failed with this error: ERROR: Uploading artifacts as "archive" to coordinator... too large archive 🔻

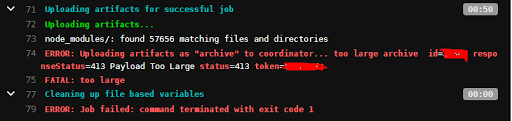

node_modules/: found 57656 matching files and directories

ERROR: Uploading artifacts as "archive" to coordinator... too large archive id=31845 responseStatus=413 Payload Too Large status=413 token=YGCc9B7z

FATAL: too large

Cleaning up file based variables

00:00

ERROR: Job failed: command terminated with exit code 1

I spent some time debugging this error and eventually fixed it. The problem was the maximum artifact size limit. In my GitLab instance it was set to 100 MB, which is the default artifact upload size limit. Here are the steps to correct this error.

To change this setting, open your GitLab instance, go to Admin Area > Settings > CI/CD > Continuous Integration and Deployment, and increase the value of Maximum artifacts size (MB).

Note: This option is only available for self-managed GitLab instances, not GitLab.com. The default value for GitLab.com SaaS is 1 GB.
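Before raising the limit, it can help to know roughly how big the artifact directory is. The following is a minimal Python sketch, not something from my pipeline: the helper names `directory_size_mb` and `exceeds_limit` are made up for illustration, and the 100 MB default is the value mentioned above. It measures the raw size on disk, so the compressed archive that the runner uploads will usually be smaller; treat the result as an upper-bound estimate.

```python
import os


def directory_size_mb(path: str) -> float:
    """Return the total size of the regular files under `path`, in MB.

    1 MB is taken as 1024 * 1024 bytes. Symbolic links are skipped so
    that linked files are not counted twice.
    """
    total_bytes = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full_path = os.path.join(root, name)
            if not os.path.islink(full_path):
                total_bytes += os.path.getsize(full_path)
    return total_bytes / (1024 * 1024)


def exceeds_limit(path: str, limit_mb: float = 100) -> bool:
    """Return True if the raw size of `path` is above `limit_mb`.

    The 100 MB default is an assumption taken from the default
    artifact limit described in this post.
    """
    return directory_size_mb(path) > limit_mb


# Example usage (hypothetical path):
#   print(f"{directory_size_mb('node_modules'):.1f} MB")
#   print(exceeds_limit("node_modules", limit_mb=100))
```

If the directory is far above the limit, it may be worth checking whether the job really needs to upload all of it as an artifact before increasing the limit.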

You can set this limit at different levels:

1. Instance level

Go to Admin Area > Settings > CI/CD and change the value of maximum artifacts size.

2. Project level

Go to the project's Settings > CI/CD > General pipelines and change the value of maximum artifacts size.

3. Group level

Go to the group's Settings > CI/CD > General pipelines and change the value of maximum artifacts size.

Then click Save changes and re-run the pipeline. 😍😍😍
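If you prefer to script the instance-level change instead of clicking through the UI, GitLab's application settings API accepts a `max_artifacts_size` value in MB. The sketch below is only an illustration under some assumptions: the function name `build_settings_request`, the URL `https://gitlab.example.com`, and the token value are placeholders, and the token is assumed to belong to an administrator. It only builds the request; the line that sends it is left commented out.

```python
import urllib.parse
import urllib.request


def build_settings_request(base_url: str, token: str, max_artifacts_size_mb: int) -> urllib.request.Request:
    """Build a PUT request that sets the instance-wide artifact limit.

    `base_url` and `token` are placeholders supplied by the caller; the
    token is assumed to be an administrator's personal access token.
    """
    if max_artifacts_size_mb <= 0:
        raise ValueError("max_artifacts_size_mb must be a positive number of MB")

    url = base_url.rstrip("/") + "/api/v4/application/settings"
    body = urllib.parse.urlencode({"max_artifacts_size": max_artifacts_size_mb}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"PRIVATE-TOKEN": token},
    )


# Example usage (hypothetical host and token):
#   request = build_settings_request("https://gitlab.example.com", "<admin-token>", 500)
#   urllib.request.urlopen(request)  # sends the change to the instance
```

After the setting is updated, re-run the pipeline the same way as with the UI steps.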

